AI Deep Reasoning

for your Data

HybridRAG retrieval with multi-hop reasoning and per-claim citations

Trusted by developers at

Why Traditional AI Fails Your Team

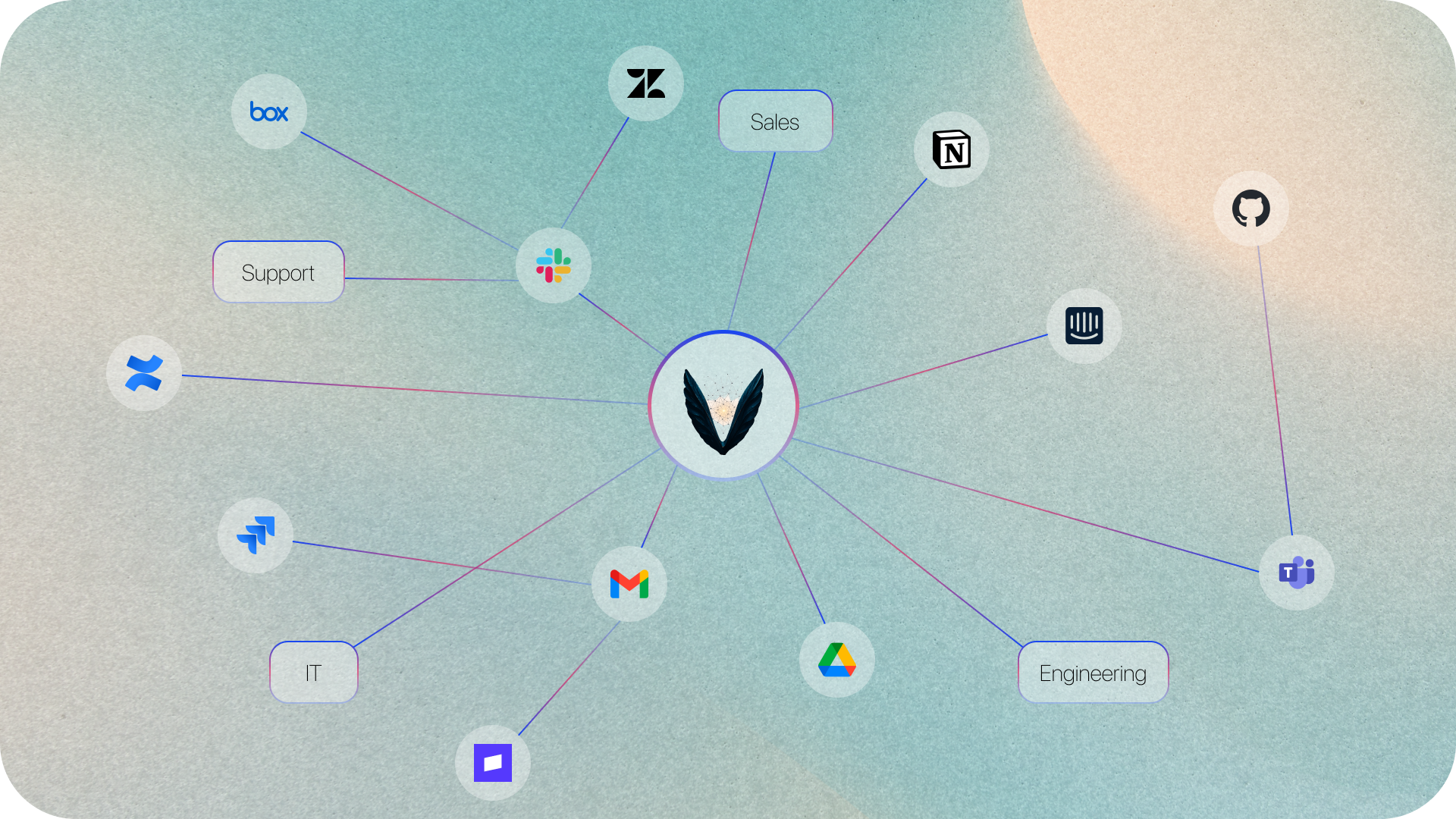

Your company data lives in dozens of disconnected apps. No AI can see across all of them—until now.

Your Data Is Scattered Everywhere

Slack, Notion, Drive, Salesforce, Zendesk, Jira... Your team's knowledge is scattered across dozens of disconnected tools.

Search Isn't Reasoning

Most AI tools retrieve text snippets based on keyword matching. They find documents—but can't connect the dots. Complex questions that require tracing relationships across your data get fragments, not answers.

Building This In-House? Don't.

Custom RAG pipelines take 6-12 months, require ML expertise, and you'll still spend months building integrations. Your engineers should be shipping products, not infrastructure.

One Connected Knowledge Base

All Your Apps. One AI That Understands Them.

Multi-Hop Reasoning

AI That Connects the Dots Across Everything

Ready Today, Not Next Year

Skip the Science Project. Start Asking Questions.

from vrin import VRINClient

# Initialize with your API key

client = VRINClient(api_key="vrin_...")

# Insert knowledge from your documents

client.insert(

content="Q4 roadmap includes...",

title="Product Roadmap 2025"

)

# Query with multi-hop reasoning

response = client.query("What's our Q4 roadmap?")

print(response["summary"]) # AI-generated answer

print(response["sources"]) # Source documentsSee VRIN in Action

Watch how VRIN transforms AI applications with persistent memory, user-defined specialization, and expert-level reasoning.

From Raw Data to Expert Insights

VRIN's HybridRAG architecture transforms fragmented information into persistent, intelligent memory.

Connect & Ingest

Universal Data Integration

VRIN ingests from any source—APIs, databases, documents, conversations, Slack threads, customer tickets. No complex ETL pipelines. No data movement. Just simple REST API calls that work with your existing infrastructure.

What's inside VRIN?

Teams choose VRIN because it transforms AI from forgetful assistants into expert systems with persistent memory and deep reasoning.

Facts-First Memory

Knowledge graphs that store facts with provenance, not just embeddings. Build institutional memory that compounds over time.

Multi-Hop Reasoning

Constraint-solver engine that traces relationships across documents and time to answer complex 'why' questions.

AI Specialization

Define domain experts for sales, engineering, finance with custom reasoning patterns and knowledge focus areas.

HybridRAG Routing

Intelligent query analysis routes to optimal path: vector search for similarity, graph traversal for reasoning.

BYOC/BYOK Security

Deploy in your cloud or ours. Complete data sovereignty, zero vendor lock-in, enterprise-grade isolation.

Temporal Consistency

Track how facts evolve over time with automatic conflict resolution and versioning for changing information.

Proven Across Industries

Vrin's memory orchestration platform delivers value across diverse sectors, with specialized demos and case studies.

Healthcare

Transform patient care with persistent memory for clinical conversations, treatment history, and care coordination.

Finance

Enhance financial AI with persistent memory for client relationships, transaction history, and regulatory compliance.

Legal

Revolutionize legal AI with memory for case histories, precedent tracking, and client communication context.

Healthcare Industry Demo

Watch how VRIN transforms AI interactions with persistent memory in the Healthcare Industry.

See how VRIN enhances patient care with persistent clinical memory and specialized AI reasoning

Beyond Traditional RAG Systems

VRIN's hybrid architecture combines the best of vector search and graph traversal, enhanced with user-defined specialization for unmatched domain expertise.

Traditional RAG

- Vector-only retrieval

- No domain specialization

- Limited context understanding

- 68.18 F1 performance

Graph RAG

- Graph-only traversal

- Better for multi-hop queries

- Still lacks specialization

- 71.17 Acc on complex tasks

VRIN Hybrid

- Intelligent query routing

- User-defined AI experts

- Multi-hop reasoning

- 71.17+ Acc with specialization

Architecture Comparison: Traditional vs HybridRAG

Technical analysis of different RAG pipeline architectures, comparing performance, limitations, and architectural components across three distinct approaches.

Traditional RAG Pipeline

Standard vector-based retrieval with limited context understanding and no domain specialization.

System Architecture

Graph RAG Pipeline

Relationship-based traversal system optimized for multi-hop queries but lacks user-defined specialization.

System Architecture

VRIN HybridRAG Pipeline

Intelligent query routing with user-defined AI experts, combining vector search and graph traversal.

System Architecture

Architecture Performance Summary

Comparative analysis across key performance metrics

| Architecture | Accuracy | Speed | Specialization | Multi-hop |

|---|---|---|---|---|

| Traditional RAG | 68.18 F1 | ~2-5s | None | Limited |

| Graph RAG | 71.17 Acc | ~5-10s | None | Good |

| VRIN HybridRAG | 71.17+ Acc | <1.8s | User-Defined | Advanced |

Seamless Integration

Drop Vrin into your existing stack with simple APIs. No complex setup or migration required.

LLM Providers

OpenAI, Anthropic, Cohere, Google AI

Frameworks

LangChain, LlamaIndex, AutoGPT

Cloud

AWS, Azure, GCP, Vercel

Enterprise

Salesforce, SAP, ServiceNow

Choose Your Intelligence Level

From individual developers to enterprise deployments, VRIN scales with your needs. All plans include our revolutionary user-defined AI specialization.

Perfect for developers and small teams getting started

What's Included:

- 100k chunks / 100k edges

- 5k queries/month

- Shared HybridRAG infrastructure

- Basic memory & CBOM

- API key authentication

- CSV/S3 connectors

- Community support

For growing teams that need dedicated infrastructure

What's Included:

- 2M chunks / 3M edges

- 100k queries/month

- Dedicated indices

- Full CBOM & TTL

- Basic RBAC

- + Postgres/Drive connectors

- Email support (48h SLA)

- Extra storage/queries available

For enterprises requiring security and compliance

What's Included:

- 10M chunks / 15M edges

- 500k queries/month

- Dedicated + VPC peering

- Full + compliance exports

- SSO/SAML + SCIM

- + Slack/Jira/Confluence

- Priority support (8-12h SLA)

- Compliance exports, private LLM

Custom solution for large-scale deployments

What's Included:

- Custom (100M+ chunks; 150M+ edges)

- Custom queries (SLA'd)

- Private/VPC or on-premises

- Full + auditor packs

- SSO/SAML, SCIM, data residency

- All + custom connectors

- Dedicated TAM & DSE

- On-prem, managed upgrades

All Plans Include

Revolutionary capabilities that set VRIN apart

Core Intelligence

- User-defined AI specialization

- Multi-hop reasoning across documents

- Smart deduplication (40-60% savings)

- Temporal knowledge graphs

- Lightning-fast fact retrieval (<1.8s)

Enterprise Features

- Complete audit trails

- Explainable AI responses

- High-confidence fact extraction

- Cross-document synthesis

- Production-grade security

Questions about pricing or need a custom solution?

ROI Guarantee: VRIN typically pays for itself within the first quarter through reduced engineering costs, faster time-to-market, and superior analysis quality.